An Interactive Musical Prediction System with MDRNNs

Dr Charles Martin - The Australian National University

web: charlesmartin.au mastodon: @charlesmartin@aus.social

What is this?

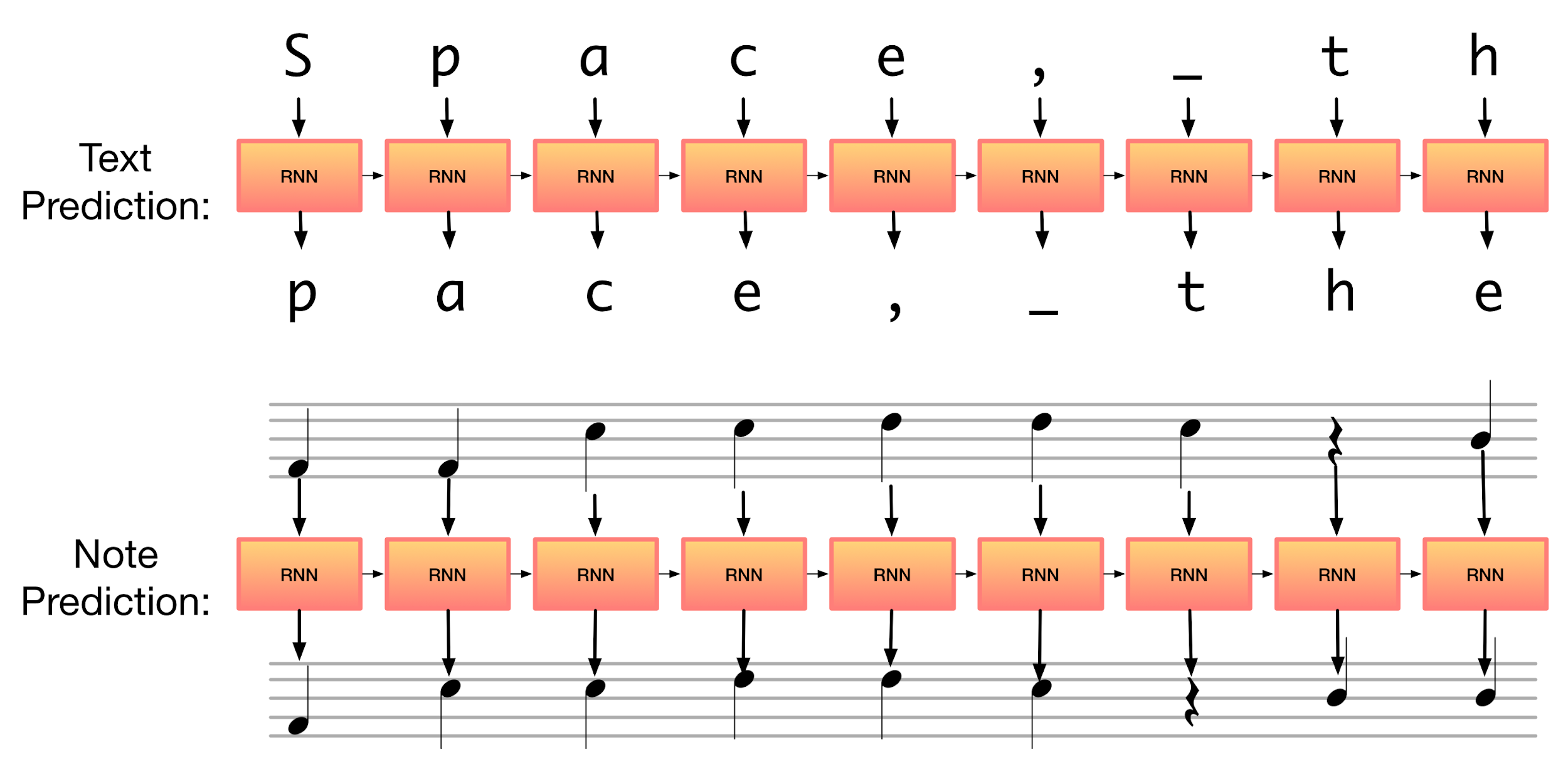

Learning to Predict Sequences

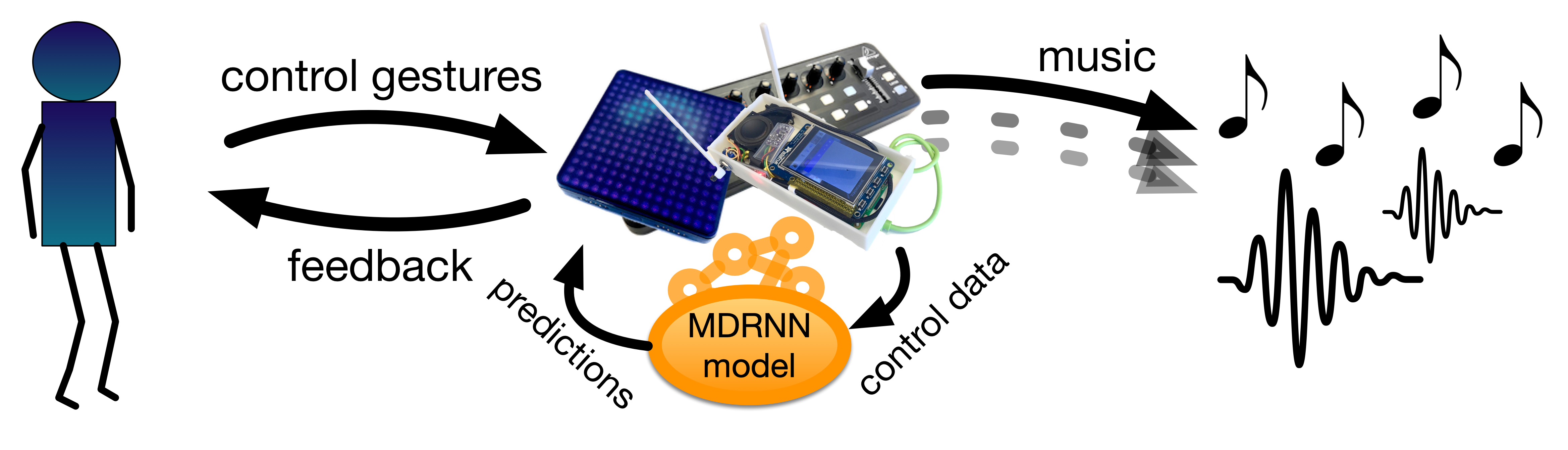

Interacting with Musical Predictions

Why is this needed?

| Creative Deep Learning Systems | NIMEs |
|---|---|
| Focus on MIDI data (e.g., Magenta Studio) | Yes MIDI, but also many custom sensors |
| Focus on digital audio | Focus on performer gestures |
| Focus on composition/artefact generation | Focus on interaction |
| Rhythm on 16th note grid | Complex or no rhythm |
| Focus on categorical data | Continuous data more interesting |

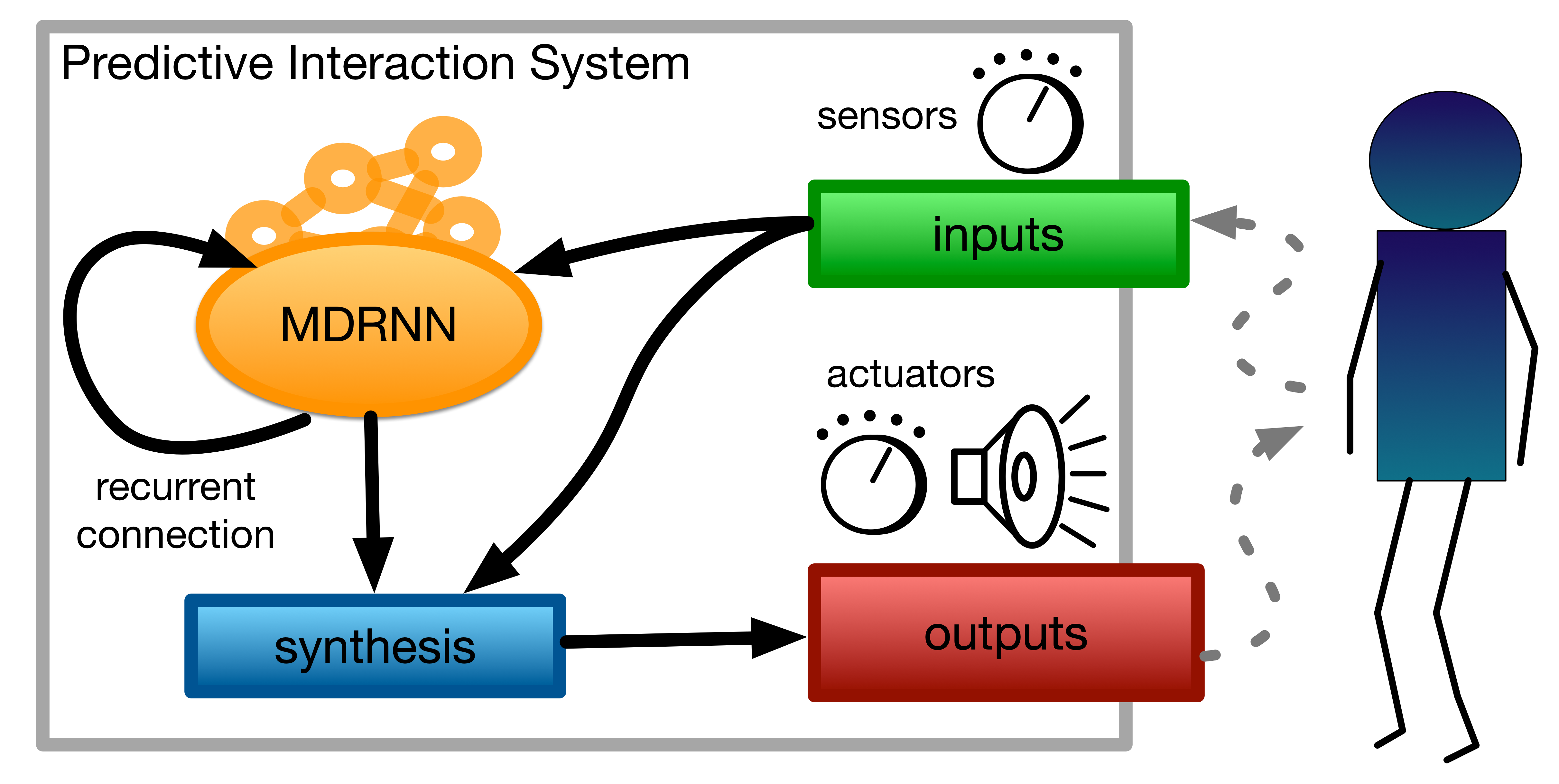

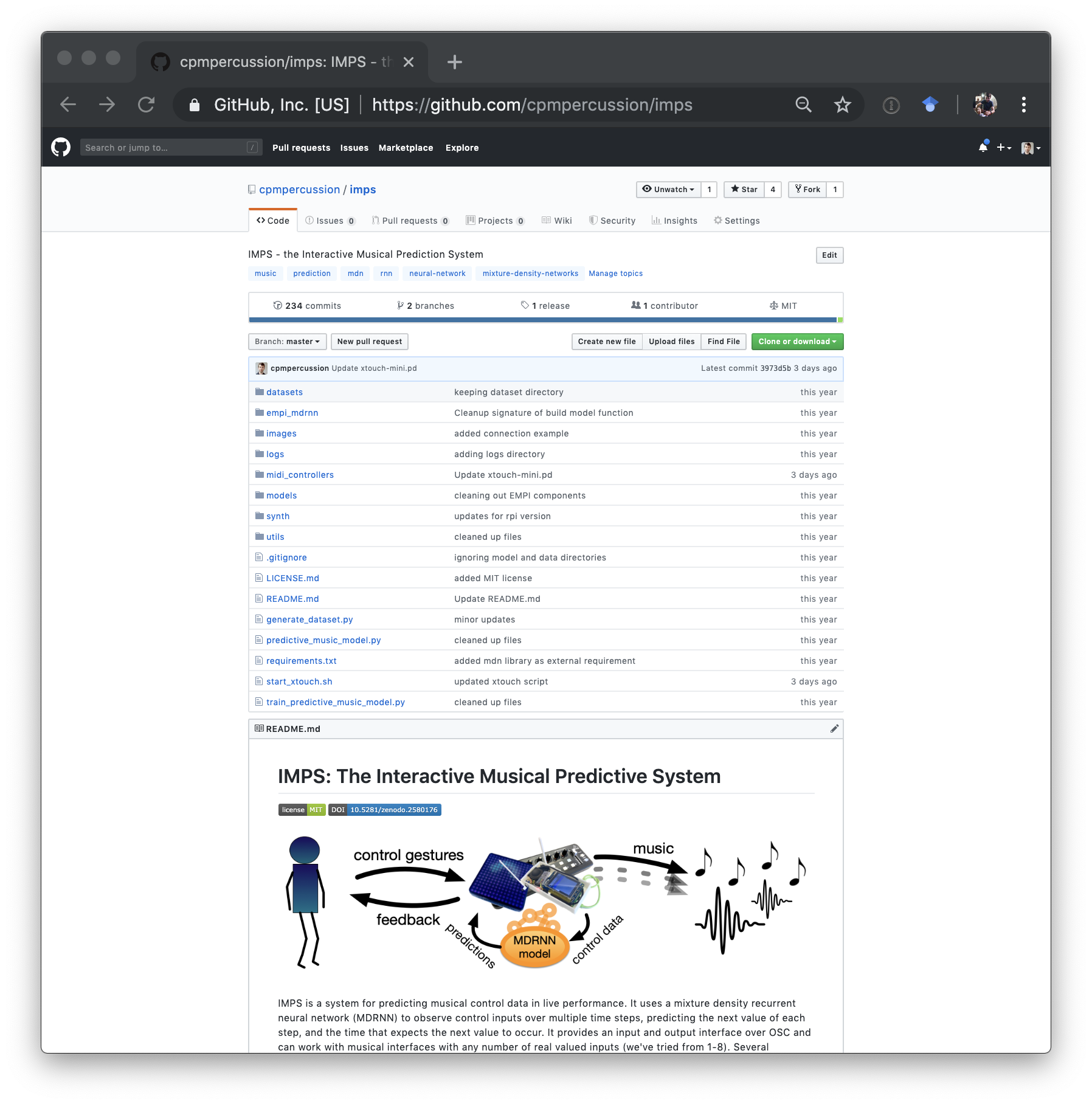

IMPS: Interactive Musical Prediction System

- An opinionated deep learning model for NIMEs

- An environment for making NIMEs that play themselves

- “Wekinator” for deep learning?

How does it work?

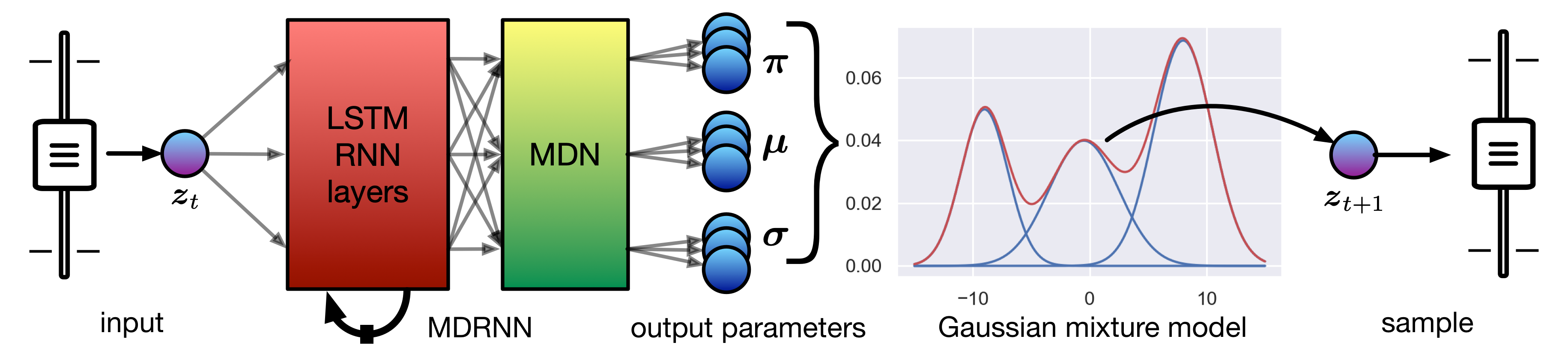

Mixture Density Recurrent Neural Network

Mixture Density RNN

Good at predicting creative, continuous, multi-dimensional data: handwriting, sketches… musical gestures?
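
To make the "mixture density" idea concrete, here is a minimal sketch of drawing one prediction from a mixture of diagonal Gaussians, which is the kind of distribution an MDRNN outputs at each step. The function name, parameter layout and example numbers are assumptions for illustration, not IMPS's actual code.

```python
import random

def sample_mixture(weights, means, scales, rng):
    """Draw one vector from a mixture of diagonal Gaussians.

    weights: mixture weights, one per component (assumed to sum to 1).
    means, scales: per-component lists of per-dimension means and
    standard deviations. All names here are illustrative.
    """
    # Pick a component in proportion to its mixture weight.
    k = rng.choices(range(len(weights)), weights=weights, k=1)[0]
    # Sample each dimension independently from the chosen component.
    return [rng.gauss(m, s) for m, s in zip(means[k], scales[k])]

rng = random.Random(0)
weights = [0.7, 0.3]                    # made-up example values
means = [[0.1, 0.5], [0.4, 0.9]]
scales = [[0.01, 0.05], [0.02, 0.05]]
sample = sample_mixture(weights, means, scales, rng)
```

In a real system the weights, means and scales would come from the network's output layer at every time step rather than being fixed.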

What to do with predictions?

- Call-and-Response: Continue gestures when performer stops

- Layered predictions: Always predict next move from current gesture

- Duet: Two interdependent processes
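
One possible reading of the first two modes above, sketched as a small decision function. The mode strings, the `idle_threshold` parameter and the return convention are hypothetical, and the duet mode is left out because the slide does not specify how the two processes interact.

```python
def plan_step(mode, seconds_since_input, idle_threshold=2.0):
    """Return (model_sounds, model_input_source) for one prediction step.

    idle_threshold is a hypothetical number of seconds of silence after
    which the performer is treated as having stopped.
    """
    performer_active = seconds_since_input < idle_threshold
    if mode == "call-and-response":
        if performer_active:
            # Listen only: the model follows the performer silently.
            return (False, "performer")
        # Performer has stopped: the model continues from its own output.
        return (True, "model")
    if mode == "layered":
        # Predictions always sound, driven by the performer's gesture.
        return (True, "performer")
    raise ValueError(f"unsupported mode: {mode}")
```

For example, `plan_step("call-and-response", 3.0)` says the model should sound and feed on its own previous output, because the performer has been silent for longer than the threshold.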

Ok, how do I use it?

Three easy steps…

- Collect some data: IMPS logs interactions automatically to build up a dataset

- Train an MDRNN: IMPS includes good presets, no need to train for days/weeks

- Perform! IMPS includes three interaction modes, with scope to extend them in future!
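
As a rough illustration of how logged interactions could become training data between the first two steps, the sketch below converts timestamped rows into time deltas and slices them into (window, next row) pairs. The log layout, the use of time deltas and both function names are assumptions, not a description of IMPS's own file format.

```python
def to_deltas(rows):
    """Replace absolute timestamps with time elapsed since the previous row."""
    out = []
    prev_t = rows[0][0]
    for t, *values in rows:
        out.append([t - prev_t, *values])
        prev_t = t
    return out

def make_examples(rows, seq_len):
    """Pair each window of seq_len rows with the row that follows it."""
    return [(rows[i:i + seq_len], rows[i + seq_len])
            for i in range(len(rows) - seq_len)]

# Hypothetical log: (timestamp in seconds, one sensor value).
log = [(0.0, 0.2), (0.5, 0.3), (0.75, 0.5), (1.75, 0.4)]
examples = make_examples(to_deltas(log), seq_len=2)
```

Each example asks the model to predict the next row, including how long to wait before it, from the rows that came before.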

Is this even practical?

Deep Learning in NIMEs??

- Is it practical for real-time use?

- How do the MDRNN parameters affect time per prediction?

- What are “good defaults” for training parameters?

- Do you need a powerful/expensive computer?
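
A question like "time per prediction" can be measured with a very small harness. The sketch below is a generic example rather than the benchmark used for the results in this talk; `fake_predict` is a stand-in for whatever function runs one model prediction.

```python
import statistics
import time

def time_per_call_ms(fn, repeats=100):
    """Return the median wall-clock time of fn() in milliseconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(durations)

def fake_predict():
    # Placeholder workload standing in for one MDRNN prediction.
    return sum(i * i for i in range(1000))

median_ms = time_per_call_ms(fake_predict, repeats=50)
```

Reporting the median rather than the mean keeps occasional scheduling hiccups from dominating the figure.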

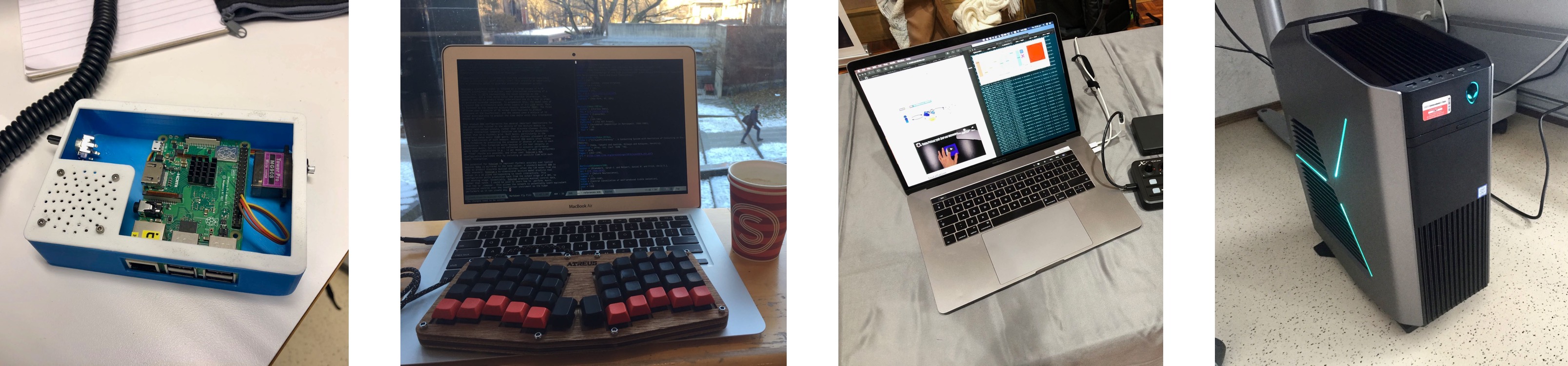

Test Systems

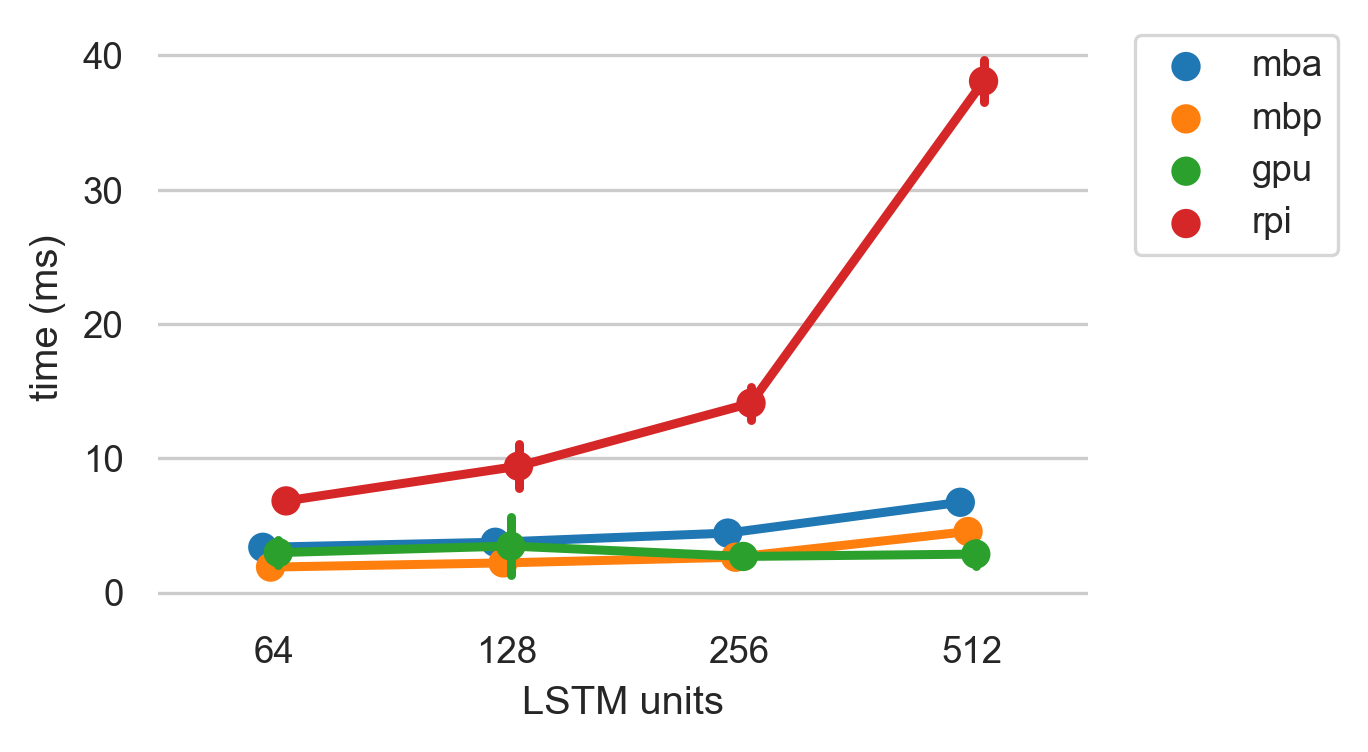

Results: Time per prediction

Time per prediction (ms) with different sizes of LSTM layers.

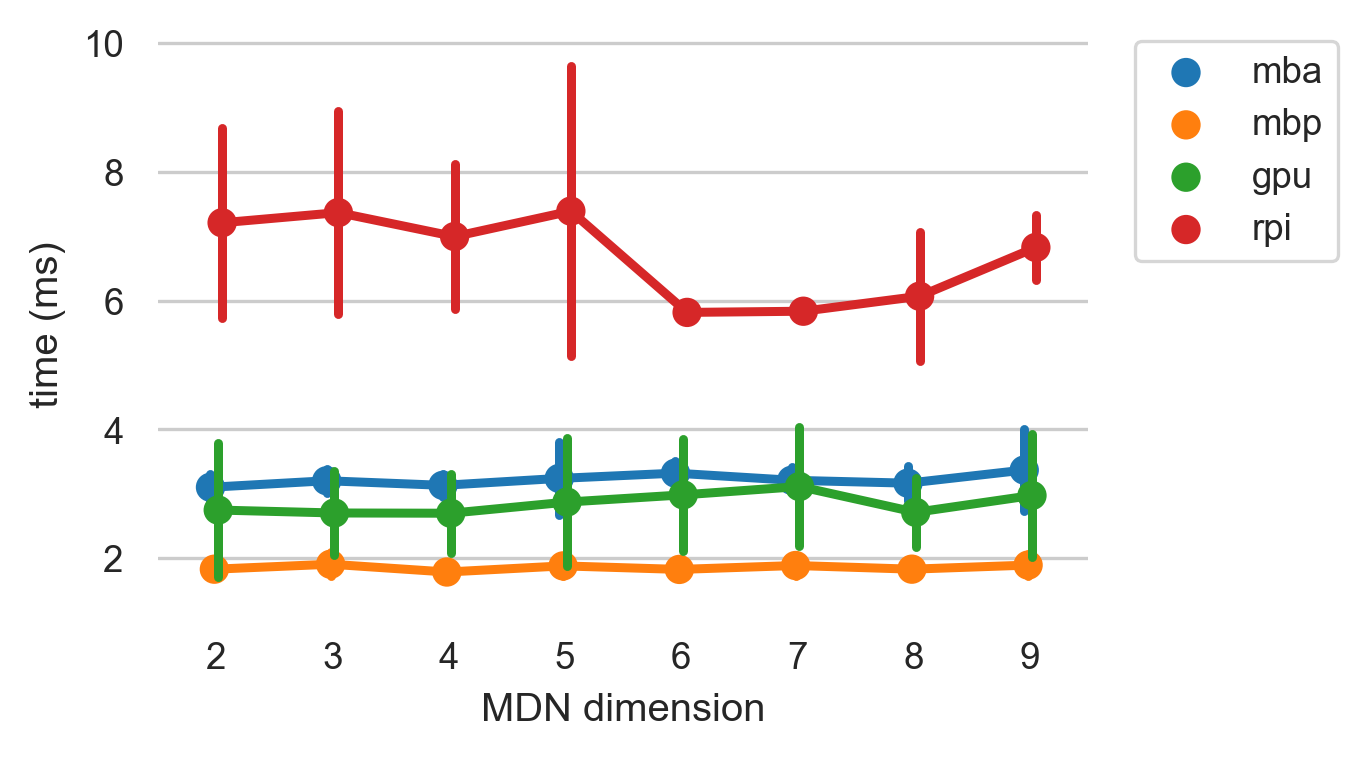

Results: Time per prediction

Time per prediction (ms) with different MDN output dimensions. (64 LSTM units)

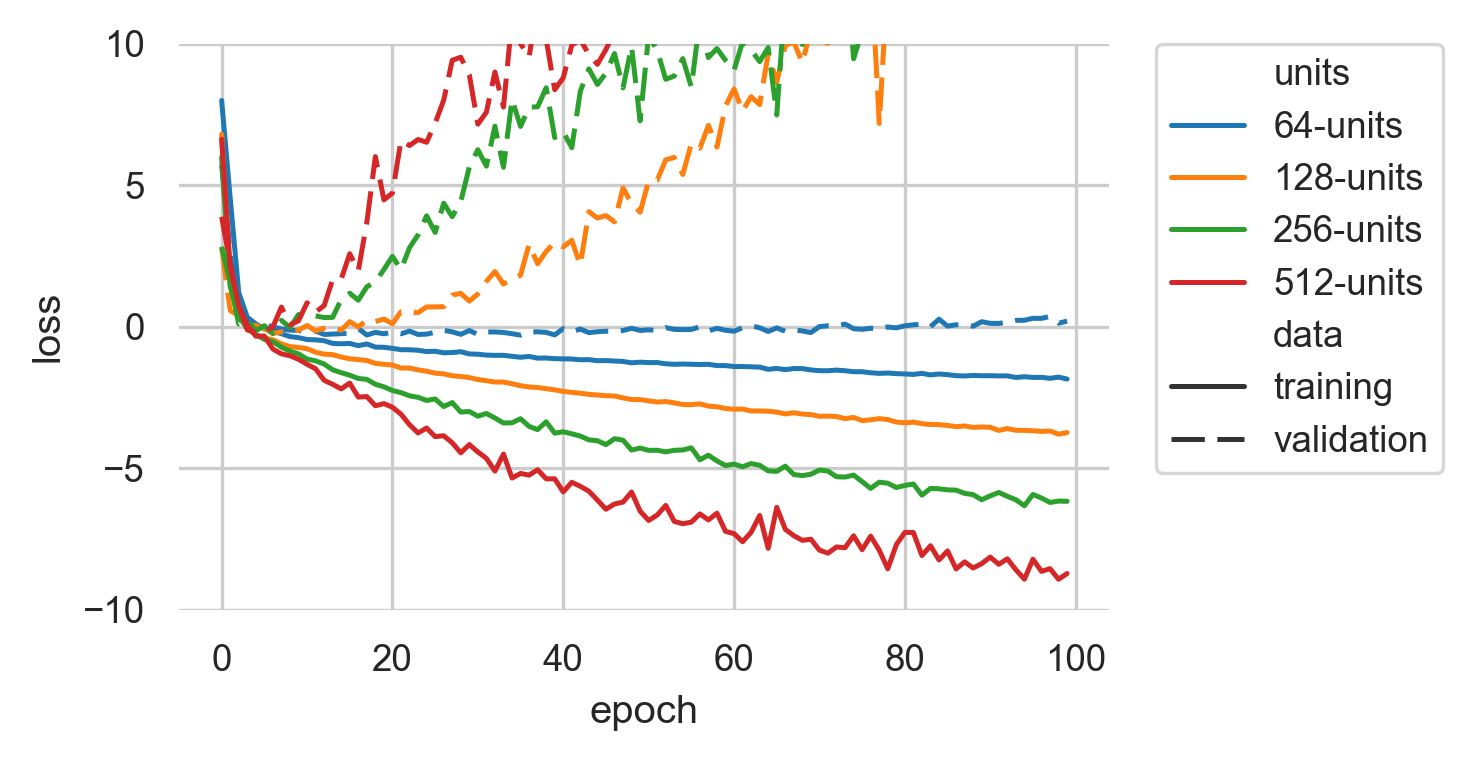

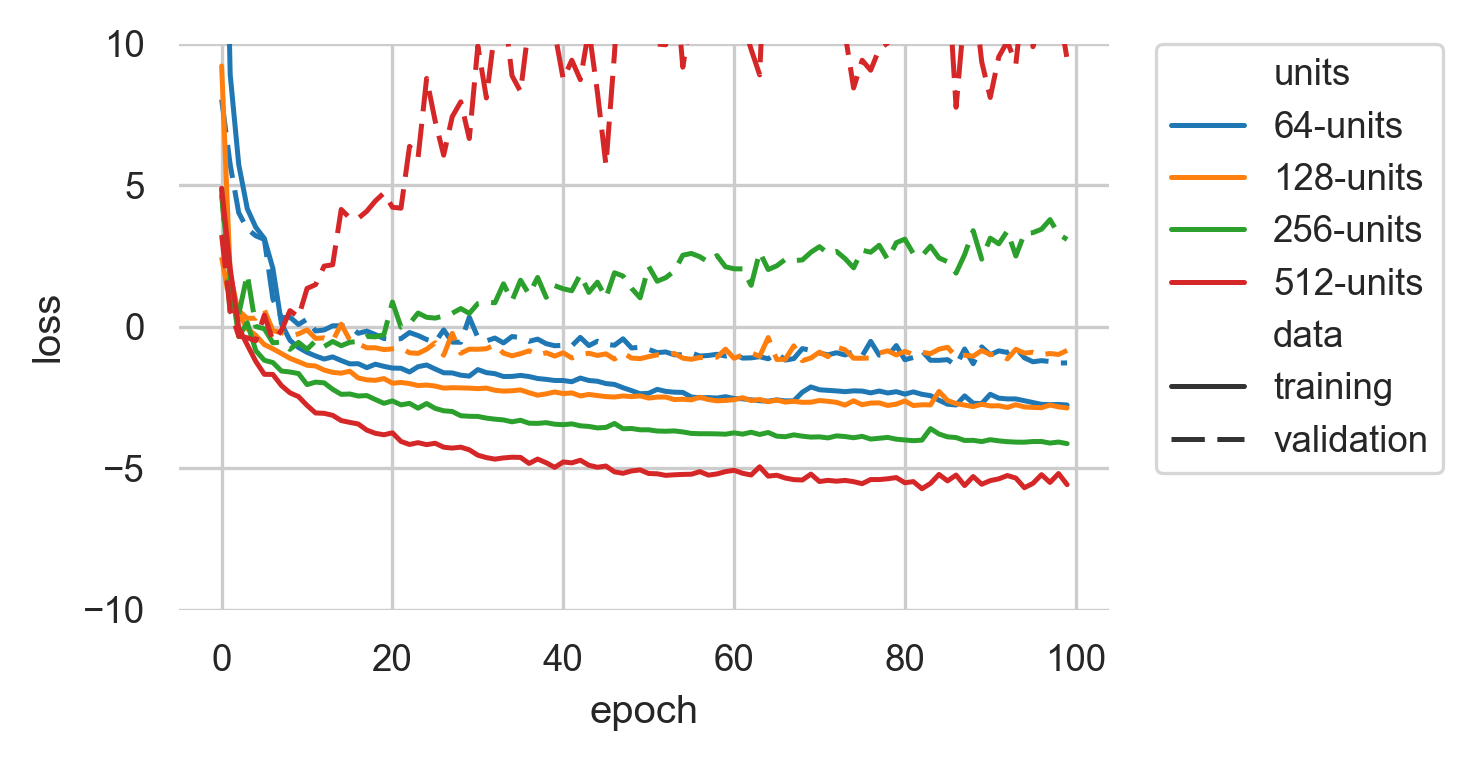

Results: Training Error vs Validation Set Error

12K sample dataset (15 minutes of performance)

Takeaway: Smallest model best for small datasets. Don’t bother training for too long.

Results: Training Error vs Validation Set Error

100K sample dataset (120 minutes of performance)

Takeaway: 64- and 128-unit models still best!

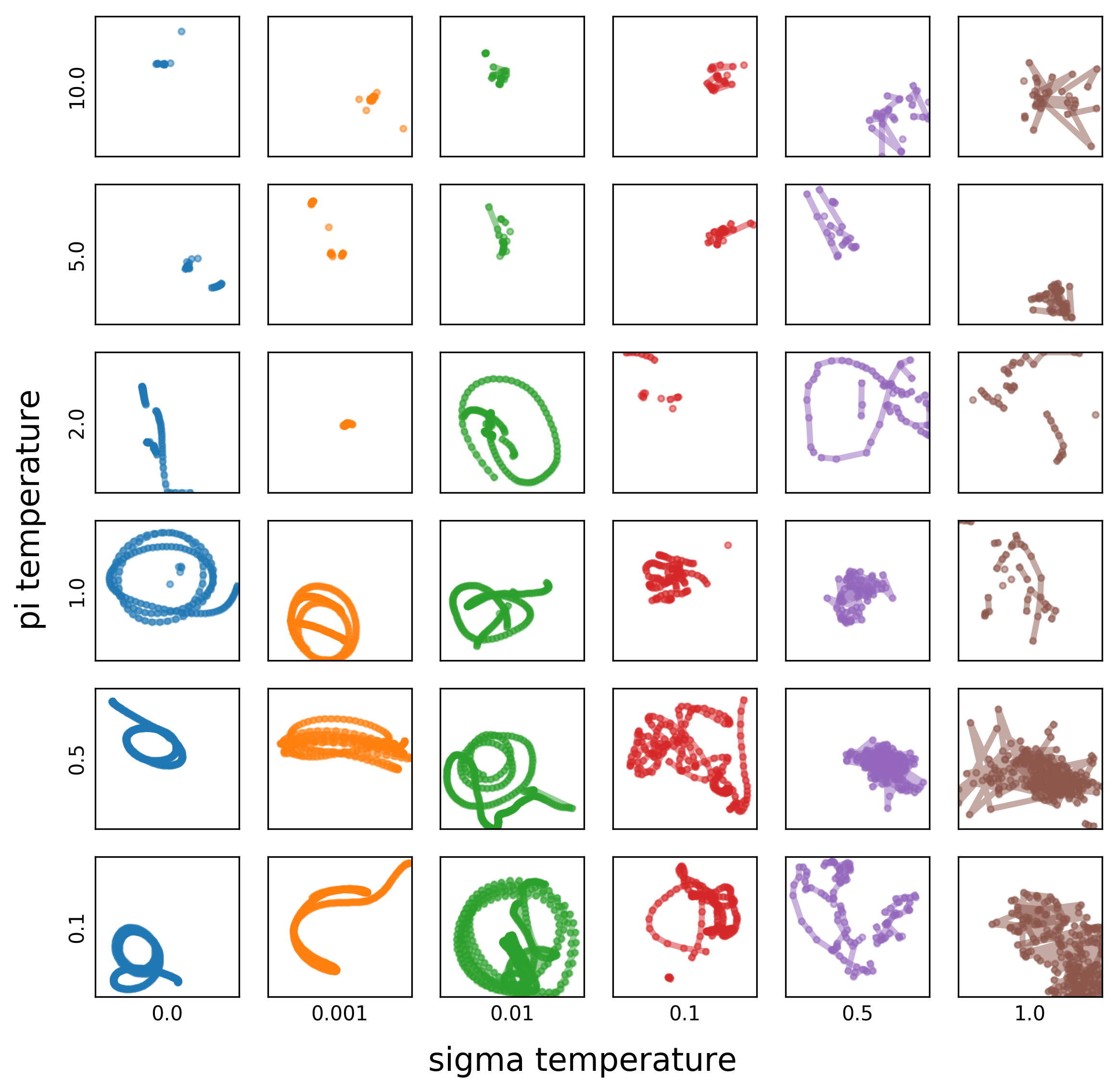

Results: Exploring Generation

Takeaway: Make Gaussians less diverse, make categorical more diverse.
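
One common way to act on this takeaway is temperature adjustment at sampling time, reading "categorical" as the distribution over mixture components. The sketch below is an assumption about how that could look: function names and temperature values are illustrative, and the exact scaling used in IMPS may differ.

```python
import math

def reweight(weights, temperature):
    """Flatten (temperature > 1) or sharpen (temperature < 1) mixture weights.

    Assumes every weight is strictly positive.
    """
    logits = [math.log(w) / temperature for w in weights]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - peak) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def rescale(scales, temperature):
    """Shrink (temperature < 1) or widen (temperature > 1) Gaussian spreads."""
    return [s * temperature for s in scales]

weights = [0.7, 0.3]      # made-up mixture weights
scales = [0.05, 0.02]     # made-up standard deviations
more_diverse_weights = reweight(weights, temperature=2.0)
less_diverse_scales = rescale(scales, temperature=0.5)
```

With these settings the less likely components get chosen more often, while each chosen Gaussian produces samples closer to its mean.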

Try it out!

- Available on GitHub

- Try with your NIMEs!

- Hack if you want!

- Add an issue with problems/results!

Twitter: @cpmpercussion

Website: https://cpmpercussion.github.io/creative-prediction/